How To Tell If Its Skin Cancer

Pedagogy Machines to Detect Skin Cancer

Leveraging artificial intelligence to allocate medical images such as images of moles, CT scans, MRI scans and etc. as a diagnosis tool.

The diagnosis process for cancer patients looks something like this:

- A visit to your family dr. to determine if further testing is required (~3 days to schedule an date)

- If further testing is required, a skin biopsy is typically performed (~iii weeks to schedule an appointment)

- If diagnosed with skin cancer, farther testing may be recommended to provide boosted details similar the phase of cancer (~additional ii weeks)

The entire diagnosis process takes approximately 1.5 months if you lot're lucky. I've heard horror stories of people waiting for hours in the ER to see a physician, waiting several months to visit a specialist, and the list goes on.

An interesting thing I realized is that we don't really fully recognize misdiagnosis as a critical effect. It's a hidden problem and 1 that isn't discussed frequently. The misdiagnosis rate for cancer hovers around 20%. That's ane in every five patients which accounts for iii.4 meg cases every twelvemonth! According to a study conducted, 28 percent of misdiagnosed cases are life-threatening.

What if I told you that artificial intelligence tin find skin cancer and potentially whatsoever type of affliction with far better accuracy and in much less time than human beings. In the context of pare cancer, all you need to do is literally feed the machine a picture show of the mole, and boom the machine instantly gives you a diagnosis. And in fact, I coded an algorithm to exercise just that!

Understanding the dataset: MNIST HAM 10000

To train the machine learning model, I used the dataset MNIST HAM 10000. At that place are a total of 10 015 dermatoscopic images of skin lesions labeled with their respective types of skin cancer.

The images in the data-set are separated into the following seven types of skin cancer:

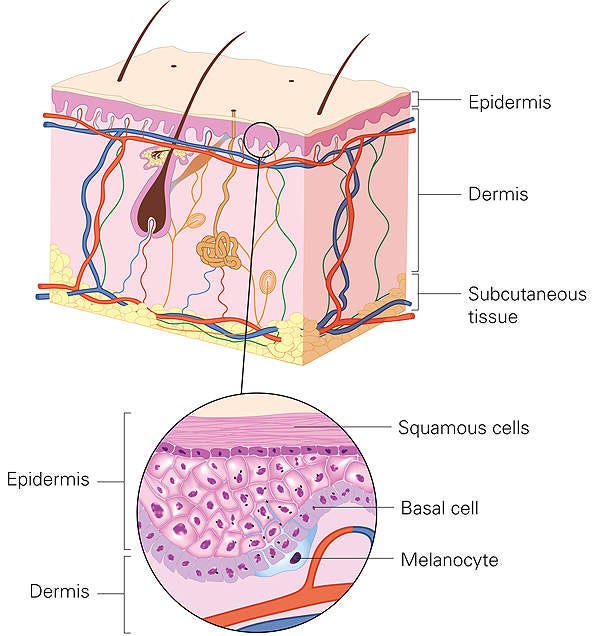

- Actinic keratosis is considered to be a noncancerous (benign) type of skin cancer. However, if left untreated, it normally develops into squamous cell carcinoma (which is cancerous).

- Dissimilar actinic keratosis, basal cell carcinoma is a cancerous blazon of skin lesion that develops in the basal cell layer located in the lower part of the epidermis. It is the about common type of peel cancer accounting for 80% of all cases.

- Benign keratosis is a noncancerous and slow-growing blazon of skin cancer. They can be left untreated as they are typically harmless.

- Dermatofibromas are besides noncancerous and usually harmless, thus no handling is required. It is commonly found pinkish in color and appears like a round bump.

- Melanoma is a type of malignant peel cancer that originated from melanocytes, cells that are responsible for the pigment of your skin.

- Melanocytic nevi are a benign type of melanocytic tumor. Patients with melanocytic nevi are considered to exist at a higher take chances of melanoma.

- Vascular lesions are equanimous of a wide range of skin lesion including cherry angiomas, angiokeratomas, and pyogenic granulomas. They are similarly characterized as being red or purple in color and frequently appear equally a raised bump.

For my model, I applied a convolutional neural network (CNN) which is a deep learning algorithm to railroad train my data. CNNs are specially established in the area of image classification.

Only wait, what is Deep Learning?

Deep learning is a sub-grade of machine learning that is inspired by the neural connectivity of the encephalon.

Similar to the compages of the brain, deep learning makes it possible for different nodes/neurons (the circles in the motion-picture show) in each layer to be connected to the consecutive layers.

There are 3 principal types of layers in deep learning:

- Input Layer: where the input information is fed into the model

- Hidden Layers: responsible for discovering the meaning of the information

- Output Layer: returns the predicted respond/label

There are 2 variables that are involved in interpreting the information, weights, and biases.

In the scenario in a higher place, the weight and summit are the two inputs fed into the model. The deep neural network so multiplies the input past the weight and adds the bias. To produce an output, the answer is passed through an activation function. In simple terms, activation functions are responsible for computing the output of a node given the input.

Grooming the data requires non only an algorithm, in the case of deep neural networks, it also requires defining a loss role. Loss functions allow the model to understand its prediction errors.

A Loss function is denoted as: (actual output - predicted output) ** 2

We term the process of minimizing the error rate after every iteration through the model "learning".

After every iteration, the weights and biases are updated in a way that minimizes the mistake charge per unit of the model. When training a model, the goal is to essentially minimize loss which subsequently increases accuracy as the model makes fewer and fewer mistakes. Look, but how exactly are the weights and biased refined?

For each loss data point on computed by the loss function, the average slope (a vector representing the derivative of the function) is calculated by leveraging the backpropagation algorithm. The gradient tells us how much each parameter influences the output.

The slope descent algorithm uses the backpropagation algorithm to refine the weights. Basically, gradient descent'southward goal is to find the minimum of the loss function, the point where the loss of the model is closest to 0.

When the model reaches the output layer, an activation role is used to normalize the values so they correspond to a pct. The sigmoid function is the mutual activation function used to catechumen all values into a number that is betwixt 0 and i representing the probability of each output. The output value with the highest probability is the model'southward prediction.

To sum it up, deep learning is a subset of machine learning and belongs to a class of algorithms called neural networks. The architecture of neural networks mimics how neurons in our brains are continued to each other.

The Model: Leveraging Convolutional Neural Networks

Similar to neural networks, CNNs have input layers, output layers, and are equanimous of nodes. Merely wait, how are convolutional neural networks different?

Vanilla neural networks (aka multilayer perceptron) takes a vector in as an input. For an image with a shape of 100 pixels past 100 pixels, a regular multilayer perceptron will have to compute x,000 weights for each node in the 2d layer. It you have a 10 node layer, that number apace accelerates to 100,000 weights!

Leveraging the architecture of CNNs, the number of parameters needed can exist greatly reduced. This is considering CNNs use filters which are composed of a vector of weights as its learnable parameters. This means that that size of the paradigm doesn't necessarily affect the number of "learnable" parameters.*

CNNs can take multi-channels of an epitome in as an input. Images unremarkably consist of 3 layers as they are composed of three primary colors: blood-red, blue, and green. Each pixel in each of the 3 layers constitutes a number ranging from 0 to 255 that represents the intensity of the color.

Aside from the input and output layers, just like other neural networks, convolutional neural networks includes multiple hidden layers. There are three main types of hidden layers constitute in convolutional neural networks:

- Convolutional layers

- Pooling layers

- Fully connected layers

( Unlike the fully connected neural networks, similar the vanilla neural networks (aka multilayer perceptrons), CNNs are not equally continued structure-wise. )

The convolutional layers are responsible for performing the dot product between the input and the filter. All the computational piece of work takes place in these layers. Filters are the model's "learnable" parameters as they are used to discover specific features like the edges of an object in the image; this process is also known as feature extraction. Similar to how in multilayer perceptrons, the weights are refined after every iteration, the filters (in the context of CNNs) which contains the weights are the parameters that are refined later on every training iteration. For CNNs, the almost common activation role applied is called a ReLU (Rectified Linear Unit) office and is denoted as max(0,x). This ways that for whatsoever value less than 0, the output will exist 0, and for all values above 0, the output would exist x (the input). ReLU functions output simply positive values and therefore restricts the range.

The pooling layers narrows the size of the data by reducing its dimensions. For my model, I used a specific pooling layer called max pooling. Max pooling works past comparing neurons from different layers, so extracts merely the highest value as the output, hence reducing the data'south dimensions.

Fully connected layers are similar to the subconscious layers seen in multilayer perceptrons, where nodes of 1 layer are fully connected to the nodes in the respective layers. This layer is responsible for communicating the final output.

The Nitty-Gritty (aka The Source Lawmaking)

Moving on to the fun stuff and of form, all the code!

Instead of downloading 3GB of images and then uploading it on google collaboratory which can exist tedious, I used the Kaggle API instead.

#-------------------------Kaggle API Setup--------------------- #Install kaggle library

!pip install kaggle #Make a directory called .kaggle which makes it invisible

!mkdir .kaggle import json

token = {"username":"ENTER YOUR USENAME","key":"ENTER YOUR Key"}

with open('/content/.kaggle/kaggle.json', 'w') as file:

json.dump(token, file)!cp /content/.kaggle/kaggle.json ~/.kaggle/kaggle.json

!kaggle config set -n path -v{/content}

!chmod 600 /root/.kaggle/kaggle.json

After setting up the Kaggle API, download the MNIST HAM 10000 dataset and unzip the files.

#---------------Downloading and unzipping the files-------------- #Data directory: where the files volition unzip to(destination folder)

!mkdir information

!kaggle datasets download kmader/peel-cancer-mnist-ham10000 -p information !apt install unzip !mkdir HAM10000_images_part_1

!mkdir HAM10000_images_part_2 !unzip /content/data/pare-cancer-mnist-ham10000.zip -d /content # Unzip the whole zipfile into /content/data

!unzip /content/data/HAM10000_images_part_1.null -d HAM10000_images_part_1

!unzip /content/data/HAM10000_images_part_2.zip -d HAM10000_images_part_2

#Ouputs me how many files I unzipped

!echo files in /content/data: `ls data | wc -l`

Unlike directories are made for the training dataset and the validation dataset. Seven sub-folders for the seven different labels are created within both the training and validation directory.

#-------------------Make directories for the data------------------- import os

import errno base_dir = 'base_dir' image_class = ['nv','mel','bkl','bcc','akiec','vasc','df'] #3 folders are made: base_dir, train_dir and val_dir try:

os.mkdir(base_dir)except OSError equally exc:

train_dir = bone.path.bring together(base_dir, 'train_dir')

if exc.errno != errno.EEXIST:

raise

pass

attempt:

os.mkdir(train_dir)

except OSError as exc:

if exc.errno != errno.EEXIST:

heighten

pass

val_dir = os.path.join(base_dir, 'val_dir')

endeavor:

os.mkdir(val_dir)except OSError as exc:

#make sub directories for the labels

if exc.errno != errno.EEXIST:

heighten

laissez passer

for x in image_class:

os.mkdir(train_dir+'/'+ten)

for x in image_class:

os.mkdir(val_dir+'/'+ten)

To preprocess the information, the data is split up into training data and testing data at a ix–1 ratio. The data is then moved accordingly to the binder that corresponds with its label.

#-----------------splitting data/transfering data------------------- #import libraries

import pandas as pd

import shutil df = pd.read_csv('/content/information/HAM10000_metadata.csv') # Set y as the labels

y = df['dx'] #split data

from sklearn.model_selection import train_test_split

df_train, df_val = train_test_split(df, test_size=0.i, random_state=101, stratify=y) # Transfer the images into folders, Set the image id equally the alphabetize

image_index = df.set_index('image_id', inplace=True) # Go a list of images in each of the two folders

folder_1 = os.listdir('HAM10000_images_part_1')

folder_2 = os.listdir('HAM10000_images_part_2') # Get a list of train and val images

train_list = list(df_train['image_id'])

val_list = list(df_val['image_id']) # Transfer the grooming images

for image in train_list: fname = image + '.jpg' if fname in folder_1:

#the source path

src = bone.path.bring together('HAM10000_images_part_1', fname)#the destination path

dst = os.path.join(train_dir+'/'+df['dx'][image], fname)

impress(dst)shutil.copyfile(src, dst)

if fname in folder_2:

#the source path

src = bone.path.join('HAM10000_images_part_2', fname) #the destination path

dst = os.path.join(train_dir, fname)shutil.copyfile(src, dst)

# Transfer the validation images

for paradigm in val_list: fname = paradigm + '.jpg' if fname in folder_1:

#the source path

src = bone.path.join('HAM10000_images_part_1', fname) #the destination path

dst = os.path.join(val_dir+'/'+df['dx'][epitome], fname)shutil.copyfile(src, dst)

if fname in folder_2:

# destination path to image

#the source path

src = os.path.join('HAM10000_images_part_2', fname)

dst = bone.path.join(val_dir, fname)

# copy the image from the source to the destination

shutil.copyfile(src, dst)

y_valid.suspend(df['dx'][image]) # Check how many grooming images are in train_dir

print(len(os.listdir('base_dir/train_dir')))

print(len(os.listdir('base_dir/val_dir'))) # Check how many validation images are in val_dir

print(len(os.listdir('information/HAM10000_images_part_1')))

print(len(os.listdir('data/HAM10000_images_part_2')))

I used an image generator to apply random transformations to my images. Additionally, a cracking feature with using an prototype generator is that it automatically resizes the data to the dimensions given in the parameter target_size.

#--------------image generator---------------

from keras.preprocessing.image import ImageDataGenerator

import keras

print(df.caput())

image_class = ['nv','mel','bkl','bcc','akiec','vasc','df'] train_path = 'base_dir/train_dir/'

valid_path = 'base_dir/val_dir/'

print(os.listdir('base_dir/train_dir'))

print(len(os.listdir('base_dir/val_dir'))) image_shape = 224 train_datagen = ImageDataGenerator(rescale=ane./255)

val_datagen = ImageDataGenerator(rescale=1./255) #declares information generator for train and val batches

train_batches = train_datagen.flow_from_directory(train_path,

target_size = (image_shape,image_shape),

classes = image_class,

batch_size = 64

) valid_batches = val_datagen.flow_from_directory(valid_path,

target_size = (image_shape,image_shape),

classes = image_class,

batch_size = 64 )

We all assume the hard part is coding the model, but information technology's really everything above (aka preprocessing the data).

Instead of using a convolutional neural network, I leveraged a type of architecture called Mobile Internet. Information technology's a pre-trained model that is trained on the dataset ImageNet, which has over 14 million images. For the purpose of detecting skin cancer, I constructed several layers on superlative of the Mobile Cyberspace and then trained it on the MNIST: HAM 10000 dataset.

The master reason why I used Mobile Internet instead of regular convolutional neural networks is due to the minimal computational power needed equally it reduces the number of learnable parameters and is designed to be "mobile" friendly.

#-------------------------------model------------------------------

from keras.models import Sequential

from keras.layers import Dense, Activation

from keras.layers import Conv2D, MaxPool2D, Dropout, Flatten

from keras.callbacks import ReduceLROnPlateau

from keras.models import Model mobile = keras.applications.mobilenet.MobileNet()

ten = mobile.layers[-6].output # Add a dropout and dumbo layer for predictions

x = Dropout(0.25)(x)

predictions = Dumbo(7, activation='softmax')(x)

print(mobile.input)

internet = Model(inputs=mobile.input, outputs=predictions) mobile.summary()

for layer in net.layers[:-23]:

layer.trainable = False net.compile(optimizer='adam',

loss='categorical_crossentropy',

metrics=['accuracy']) learning_rate_reduction = ReduceLROnPlateau(monitor='val_acc', patience=3, verbose=i, gene=0.5, min_lr=0.00001) history = net.fit_generator(train_batches, epochs=10)

After preparation the model, a seventy% accuracy was achieved when performing on the test batches. With a larger dataset, the accurateness can easily exist enhanced. Bogus intelligence has and then much potential to disrupt the healthcare industry. Imagine having artificial intelligence diagnosis practically whatsoever illness way better and faster than human beings, that'southward insane!

This isn't science fiction, the possibilities of AI are endless! AI is already revolutionizing healthcare in China. A hospital in China introduced a programme called AI-Force, which leverages AI-enabled machines that are able to find 30 chronic diseases with 97% accurateness!

Key Takeaways

- Deep learning is inspired past the neural connectivity of the encephalon in that every node in each layer is continued to the side by side layer

- Convolutional neural networks have 3 chief types of hidden layers: convolutional layer, pooling layer, and fully connected layer

- A filter (for CNNs) is used to extract features from the data

Don't forget to:

- Clap this commodity if yous enjoyed information technology

- Connect with me on LinkedIn

Source: https://medium.com/analytics-vidhya/teaching-machines-to-detect-skin-cancer-bd165566f0fe

Posted by: taorminapricandere.blogspot.com

0 Response to "How To Tell If Its Skin Cancer"

Post a Comment